
Implementing mTLS and Securing Apache Kafka at Zendesk


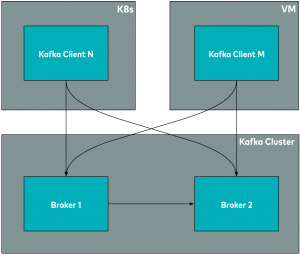

At Zendesk, Apache Kafka® is one of our foundational services for distributing events among internal systems. Our products run in pods, which can be thought of as isolated cloud environments, spread out all across the world. Each Zendesk pod has its own Kafka cluster, along with consumers and producers that run on Kubernetes (K8s) and virtual machines (VMs) and communicate with the Kafka brokers.

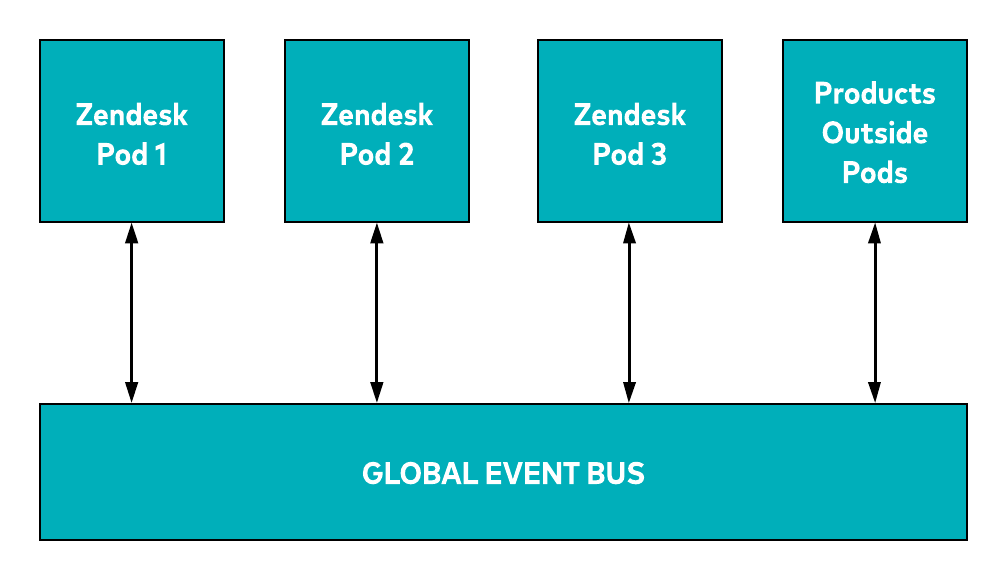

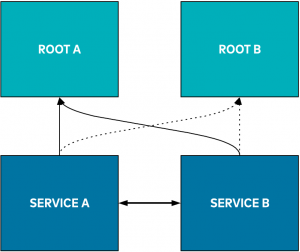

The arrows in the diagram above represent network communication, which is sent in cleartext in a default Kafka installation. Our internal Kafka clusters also used plaintext communication, protected by additional security mechanisms. Originally, these Kafka clusters were isolated by network boundaries, but we were still exposed to the risk of an attacker bypassing those mitigations. In addition, we wanted to build a global event bus to propagate events among Zendesk pods, as well as between Zendesk pods and products running outside of them.

To secure this inter-pod communication, we needed to encrypt the communication channels and enable authentication and authorisation. These communication lines are represented by the arrows in the following diagram.

This blog post describes how we addressed these needs and built a self-hosted mTLS authentication system for our Kafka clusters using open source tools. It also covers the performance impact of mTLS, how we made client onboarding easier, and how to rotate the self-signed root certificate of a private certificate authority (CA) without downtime. A link to a proof-of-concept (POC) project demonstrating root CA rotation is also included.

Problem statement

Unauthenticated clients could connect to Kafka, which presented a security threat. Although we had additional security measures in place, we were still exposed to the risk of an attacker potentially bypassing those mitigations.

Key requirements and expectations

We wanted to update our Kafka clusters so that only authenticated clients could connect to Kafka via an encrypted connection to access authorised topics. Ideally, we wanted the solution to provide the following:

- Automated generation and regeneration of certificates and key pairs

- Certificate revocation

- Quick, automated CA certificate rotation

- Logging and metrics collection for observability

- Easy client onboarding

Authentication considerations

After evaluating the security solutions available in Kafka, we decided to implement mutual TLS (mTLS) authentication because we wanted to perform key rotation regularly and needed both authentication and authorisation. TLS was deemed a better fit than SASL for our use case. The other option, Kerberos, was not feasible because it would have required new infrastructure.

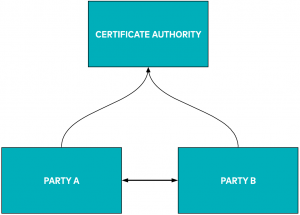

mTLS 101

In a typical mTLS setup, there’s a trusted CA that issues certificates to parties for secure communication over an untrusted network. In addition to issuing certificates, the CA also provides a way to check if the certificate issued is still valid.
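To make the "mutual" part concrete, here is a minimal sketch using Python's standard ssl module rather than Kafka's own settings. The server (broker) context refuses any peer that does not present a certificate chaining to a trusted CA, while the client context verifies the broker. The CA file path and the commented-out certificate file names are placeholders.

```python
import ssl
from typing import Optional


def broker_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Server (broker) side of mutual TLS: every client must present a certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # The "mutual" part: the handshake fails unless the client presents a
    # certificate that chains to a CA we trust.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)  # e.g. the private root CA (PEM)
    # A real broker would also present its own certificate:
    # ctx.load_cert_chain(certfile="broker.pem", keyfile="broker-key.pem")
    return ctx


def client_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client side: verify the broker's certificate and hostname."""
    # PROTOCOL_TLS_CLIENT enables CERT_REQUIRED and check_hostname by default.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)
    # ...and present a client certificate of its own:
    # ctx.load_cert_chain(certfile="client.pem", keyfile="client-key.pem")
    return ctx
```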

Vault as a CA

For our use case, we decided to build a public key infrastructure (PKI). Kafka provides robust support for TLS, including hot-swapping of certificates in brokers, so what we needed was a trust anchor (root CA) to issue those certificates. Because certificate issuance is crucial for a foundational service, the service issuing certificates to foundational services must itself be trusted and secure. We discovered that Vault, a HashiCorp product used to manage secrets for applications, has a PKI backend. Once it is enabled, you can use it as a CA that issues certificates signed by the trust anchor (root CA).
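As a rough illustration of Vault acting as the CA, the sketch below builds, without sending, the HTTP request a client could make to Vault's PKI issue endpoint (POST /v1/<mount>/issue/<role>), in which Vault generates the key pair and signs the certificate. The mount path reuses kafka-pki/root-a from the Consul key shown later in this post; the Vault address, the role name kafka-client, the TTL, and the token are hypothetical.

```python
import json
import urllib.request


def build_issue_request(vault_addr: str, mount: str, role: str,
                        common_name: str, ttl: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a Vault PKI 'issue' request.

    Vault generates a key pair and returns a certificate signed by the CA
    mounted at `mount`, constrained by the named role.
    """
    url = f"{vault_addr.rstrip('/')}/v1/{mount}/issue/{role}"
    body = json.dumps({"common_name": common_name, "ttl": ttl}).encode()
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"X-Vault-Token": token, "Content-Type": "application/json"},
    )
```

Sending it with urllib.request.urlopen(req) would return JSON whose data object includes certificate, private_key, and issuing_ca fields, roughly the material a sidecar would then write out for the client.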

We decided to go with a private CA because it would let us contain the chain of trust within our private network and have the freedom to rotate our root certificate. Furthermore, public CAs only issue certificates for the public domain, so each of our Kafka brokers and clients would have had to be publicly addressable, which we could not do.

Solution components

Based on the expectations outlined above, the following describes the components of the solution that we rolled out into production.

Vault preparation

We had to prepare our Vault cluster to work as our CA for this new use case. We built reusable Terraform modules to be able to set up generic CAs quickly, and we used the following code to bring up a self-signed root CA in our Vault installation:

locals {
  kafka_pki_prefix             = "prefix"
  kafka_broker_allowed_domains = ["broker.kafka.com"]
  kafka_client_allowed_domains = ["client.kafka.com"]
}

module "root_a" {
  source                 = "./common/kafka_pki"
  path                   = "${local.kafka_pki_prefix}/root-a"
  root_ca_common_name    = "Root A"
  vault_root_url         = "${var.vault_addr}"
  broker_allowed_domains = "${local.kafka_broker_allowed_domains}"
  client_allowed_domains = "${local.kafka_client_allowed_domains}"
}

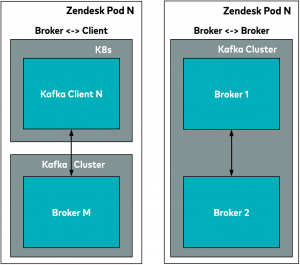

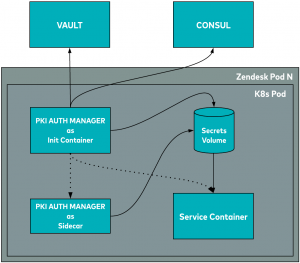

Client architecture

The illustration above shows a Kafka client running on Kubernetes. Every client running on Kubernetes must have the PKI auth manager sidecar running alongside it. The sidecar generates certificates into a shared volume, from which the client reads them when communicating with Kafka over TLS, and it regenerates the certificate before it expires. We also run it as an init container so that the Kafka client always has a certificate available when it boots up.
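For a non-JVM client, consuming the shared volume can be as simple as pointing the TLS settings at the files. The sketch below builds a librdkafka-style configuration (the format used by confluent-kafka-python, among others); the directory and the file names are assumptions, not the sidecar's actual layout.

```python
from pathlib import Path


def mtls_client_config(bootstrap: str, cert_dir: str) -> dict:
    """librdkafka-style settings for a client whose certificates a sidecar writes.

    The file names below are hypothetical; use whatever the sidecar actually writes.
    """
    d = Path(cert_dir)
    return {
        "bootstrap.servers": bootstrap,
        "security.protocol": "SSL",                        # TLS on the wire
        "ssl.ca.location": str(d / "ca.pem"),              # root(s) we trust
        "ssl.certificate.location": str(d / "cert.pem"),   # our client certificate
        "ssl.key.location": str(d / "key.pem"),            # its private key
    }
```

The result could be passed as, for example, Consumer({**cfg, "group.id": "..."}). Assuming the library reads these files only when the client is created, picking up a regenerated certificate would mean recreating the client.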

The setup for clients running on VMs is a bit more involved. It is available as an option, though we won’t be covering that topic in this post.

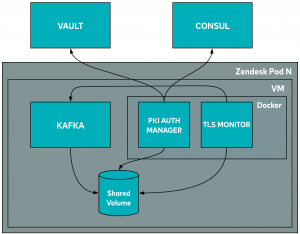

Kafka broker architecture

On the Kafka broker VMs, we run the same PKI auth manager component inside Docker to take care of the certificates. In what follows, we will discuss the TLS monitor component and what Consul does in both the client and server setup.

PKI auth manager

The PKI auth manager is a wrapper around consul-template. Some of consul-template’s features:

- It can run as a one-off script or as a daemon

- It can query Consul and Vault to render templates

- It understands TTLs and can regenerate secrets before they expire

- It can execute arbitrary scripts when updates happen

We use a custom script for logging, metrics collection, and transformation of certificates each time they are generated or regenerated.

....
{{- with $d := key $consul_key | parseJSON -}}
{{- $pki_issuer := $d.primary_issuer -}}
....
{{- with secret $pki_path $common_name_param $ttl_param -}}
....
{{- range $secondary_path := $d.secondary_issuers -}}
....
{{- with secret $path }}
....
....
....

In the snippet above, the line calling key reads from Consul, and the two lines calling secret read from Vault, in this instance to generate PKI certificates. The template doesn’t mention regenerating certificates because that happens transparently: consul-template keeps track of all the secrets it has generated and regenerates them automatically before they expire. This ensures that we always have valid PKI certificates.
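consul-template decides on its own when to fetch a fresh certificate. The sketch below shows only the general idea of regenerating well before expiry; the two-thirds threshold is an illustrative assumption, not consul-template's actual renewal policy.

```python
from datetime import datetime


def regeneration_time(not_before: datetime, not_after: datetime,
                      fraction: float = 2 / 3) -> datetime:
    """When to regenerate a certificate: after `fraction` of its validity period.

    The 2/3 default is an illustrative assumption, not consul-template's exact policy.
    """
    if not 0 < fraction < 1:
        raise ValueError("fraction must be strictly between 0 and 1")
    return not_before + (not_after - not_before) * fraction


def needs_regeneration(now: datetime, not_before: datetime, not_after: datetime) -> bool:
    # Regenerate once the threshold has passed, leaving headroom before expiry.
    return now >= regeneration_time(not_before, not_after)
```

With a short TTL such as 24 hours, this leaves several hours of slack for the regenerated certificate to be picked up before the old one expires.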

When invoking consul-template, the -template argument (consul-template … -template "…:..:/script") can include, after the source and destination, the path to a script that runs every time the template output changes. You are free to do whatever you want in that script.

The custom script that we use with consul-template takes the PEM-formatted certificate returned by Vault and converts it to the formats requested by the client. Different languages and frameworks use different certificate formats: JVM languages tend to use keystores, while others tend to use PEM-formatted certificates. Our script also performs audit logging.
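A minimal sketch of such a post-render script, assuming (hypothetically) that the template renders the leaf certificate first, followed by the private key and the CA certificates of the primary and secondary issuers, into one PEM bundle. It splits the bundle into the separate files many non-JVM clients expect and logs an audit line per file; the file names are placeholders.

```python
import logging
import re
from pathlib import Path

log = logging.getLogger("pki-post-render")

# One PEM block; the BEGIN and END labels must match (e.g. CERTIFICATE, RSA PRIVATE KEY).
PEM_BLOCK = re.compile(r"-----BEGIN ([A-Z0-9 ]+)-----.*?-----END \1-----", re.DOTALL)


def split_bundle(bundle: str) -> dict:
    """Split a rendered bundle into leaf certificate, private key and CA certificates.

    Assumes the leaf certificate is the first CERTIFICATE block in the bundle.
    """
    certs, keys = [], []
    for m in PEM_BLOCK.finditer(bundle):
        (keys if m.group(1).endswith("PRIVATE KEY") else certs).append(m.group(0))
    if not certs or len(keys) != 1:
        raise ValueError("expected at least one certificate and exactly one private key")
    return {"cert.pem": certs[0], "key.pem": keys[0], "ca.pem": "\n".join(certs[1:])}


def write_outputs(bundle: str, out_dir: Path) -> None:
    for name, pem in split_bundle(bundle).items():
        path = out_dir / name
        path.write_text(pem + "\n")
        if name == "key.pem":
            path.chmod(0o600)  # restrict the private key to its owner
        log.info("audit: wrote %s (%d bytes)", path, len(pem))
```

A JVM-facing variant would additionally package the same material into a keystore (for example with openssl pkcs12 -export or keytool), which this sketch leaves out.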

The PKI auth manager takes care of certificate rotation, provided that the service has a way of reloading the certificate when it changes on the file system or when Kafka starts rejecting the expired one. This lets us enforce a very short TTL for all our client certificates, which is recommended security practice.

TLS monitor

As you may recall, we have a component called the TLS monitor running in our Kafka broker VMs.

The TLS monitor is another Docker container that watches for changes in certificates in the shared volume. When the PKI auth manager makes changes, the TLS monitor asks Kafka to hot reload them. Kafka is capable of reloading the keystore or truststore without restarting. It also collects useful TLS certificate-related metrics and sends them to our monitoring system. This enables us to create alerts to notify us when things go wrong.
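The core of such a monitor is change detection. This sketch polls the keystore and truststore files, compares content hashes, and calls a reload hook when something changes. The hook is hypothetical and stands in for however the broker is asked to reload its TLS configuration; metrics collection is left out.

```python
import hashlib
from pathlib import Path
from typing import Callable, Dict, Iterable, List


class CertWatcher:
    """Poll keystore/truststore files and fire a callback when their contents change."""

    def __init__(self, paths: Iterable[Path], reload: Callable[[Path], None]):
        self.paths = [Path(p) for p in paths]
        self.reload = reload  # hypothetical hook, e.g. "ask the broker to reload TLS config"
        self.digests: Dict[Path, str] = {p: self._digest(p) for p in self.paths}

    @staticmethod
    def _digest(path: Path) -> str:
        # Hash contents rather than relying on mtime, which can be coarse or preserved on copy.
        return hashlib.sha256(path.read_bytes()).hexdigest() if path.exists() else ""

    def poll_once(self) -> List[Path]:
        """Check every file once; call the reload hook for each one that changed."""
        changed = []
        for p in self.paths:
            d = self._digest(p)
            if d != self.digests[p]:
                self.digests[p] = d
                self.reload(p)
                changed.append(p)
        return changed
```

In a long-running container, poll_once() would sit in a loop with a short sleep between iterations.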

Security considerations

Certificate revocation

In the solution that we were building, we wanted the ability to revoke individual certificates. Vault’s PKI backend provides a certificate revocation list (CRL), along with API and UI functionality to revoke individual certificates; however, it’s the responsibility of the participating parties to check the status of certificates during TLS negotiation. We realized that the JVM, on which Kafka and a few of our other services run, doesn’t have strong support for revocation checks. Because revocation checks have to be enabled at the JVM level, enabling them also checks the revocation status of public TLS certificates in every TLS negotiation (the service might call other HTTPS API endpoints). This is a problem because revocation status checking on the public internet is widely broken.

As a result, we had to compromise on this expectation even though it was a security and compliance requirement that we had to satisfy in order to push the global event bus into production. To compensate, we decided to implement a robust CA root certificate rotation strategy instead. If a security emergency were to occur, we could rotate all certificates throughout our systems.

Root CA rotation

Because individual certificate revocation was not an easy, quick, and automated option, root CA rotation became crucial. Here’s a look at root CA rotation:

In the illustration above, service A and service B use certificates issued by root A to communicate securely using mTLS. Rotating the root CA means changing our root CA to root B, ideally without any disruption to the availability of service A or service B. The process looks simple in the diagram, but it’s not that easy. Changing the root certificate is easy; the hard part is ensuring that the services can keep talking over mTLS during the transition.

A safe rotation requires:

- Somewhere to store the current root (source of truth)

- Something to run beside Kafka brokers and clients to regenerate certificates once the current root changes

- A way to broadcast to all those running services that the current root has changed

- A rotation that can happen in a reasonable amount of time in case of security incidents

- A way to automatically reload the regenerated certificates to Kafka brokers and clients, ideally without restarting them

That’s where Consul, another HashiCorp product, comes into play. It has a number of capabilities, but for this particular situation, we use it as a distributed key-value (KV) store.

resource "consul_keys" "root" {
  key {
    path  = "some/path/in/consul"
    value = <<-EOT
      {
        "primary_issuer": "kafka-pki/root-a",
        "secondary_issuers": []
      }
    EOT
  }
}

To facilitate the root CA rotation, we use a globally replicated Consul key that holds references to the primary root and optional secondary roots.
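The logic each watcher derives from this key is small: issue certificates from the primary root, and trust the primary plus every secondary root. A minimal sketch, assuming the JSON shape shown above:

```python
import json
from typing import List, Tuple


def roots_from_consul_value(raw: str) -> Tuple[str, List[str]]:
    """Return (issuing root, all trusted roots) from the rotation key's JSON value.

    New certificates are issued by primary_issuer; the trust store must contain
    the primary plus every secondary issuer.
    """
    doc = json.loads(raw)
    primary = doc["primary_issuer"]
    secondaries = doc.get("secondary_issuers", [])
    trusted = [primary] + [s for s in secondaries if s != primary]
    return primary, trusted
```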

{{- with $d := key $consul_key | parseJSON -}}

The line above is from the template shown earlier in this blog post. The key function uses a Consul feature called blocking queries to watch for changes to a Consul key. It roughly translates to an HTTP GET API call like the following:

GET /v1/kv/path-to-consul-key?index=10&wait=1m0s

This asks Consul to hold the request for up to one minute and to respond as soon as the key changes after index 10. The HTTP request above hangs until the key changes or the timeout is reached.
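A minimal sketch of the watch loop a blocking-query client runs, with the HTTP call injected as a function so it can run without a Consul agent. It relies on the index and wait query parameters, the X-Consul-Index response header, and the base64-encoded Value field of Consul's KV API; error handling and backoff are omitted.

```python
import base64
import json
from typing import Callable, Dict, Iterator, Tuple

# fetch(path_and_query) -> (HTTP status, response headers, body). Injected so the loop
# can be exercised without Consul; a real one would call the local agent over loopback.
Fetch = Callable[[str], Tuple[int, Dict[str, str], bytes]]


def watch_key(fetch: Fetch, key: str, wait: str = "1m") -> Iterator[str]:
    """Yield a Consul KV value every time it changes, using blocking queries."""
    index, last = 0, None
    while True:
        status, headers, body = fetch(f"/v1/kv/{key}?index={index}&wait={wait}")
        new_index = int(headers.get("X-Consul-Index", "0"))
        # If the index ever goes backwards, reset it and start over.
        index = 0 if new_index < index else new_index
        if status != 200:
            continue  # e.g. key not created yet; a real loop would also back off here
        value = base64.b64decode(json.loads(body)[0]["Value"]).decode()
        if value != last:  # a timed-out wait returns the unchanged value
            last = value
            yield value
```

A real fetch function would use an HTTP timeout longer than the wait period, so the client does not give up before Consul answers.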

But don’t blocking queries seem inefficient?

HTTP long polling usually comes with a cost on the server, especially when many clients are interested in the same piece of data. In this case, however, it performs well. The long-polling HTTP call from the client (consul-template) goes to the local Consul agent over the loopback interface, and the local Consul agent uses a single multiplexed TCP connection to the Consul server. The server still has to do some work to manage subscriptions to changes for that key, but it’s not very expensive.

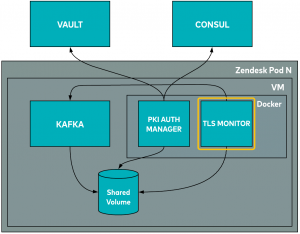

To reiterate, consul-template in conjunction with the Consul key gives most of what is needed for the rotation: storage for the current root, broadcast of changes to the current root, and quick action when the root changes. Now, we just need a way to reload the regenerated certificates to Kafka brokers and clients. For brokers, that’s where the TLS-Monitor component comes into play.

The TLS-Monitor component is another Docker container that watches for changes on keystore/truststore files and asks Kafka to reload them when they change. Kafka can hot reload certificates without requiring a restart. Other than watching certificates, it also collects certificate validity information from brokers and sends metrics into our monitoring system.

Now that we have everything we need to perform a CA certificate rotation, let’s look at how we actually do it.

CA root certificate rotation dance

When we are not in the middle of a rotation, the Consul key only holds a reference to a primary certificate issuer. Here’s what it looks like:

{
  "primary_issuer": "kafka-pki/root-a",
  "secondary_issuers": []
}

This means:

Certificate Root: root-a
Additional Trust Roots: []

The secondary_issuers field becomes useful during rotation because there is a period when we have started moving to the new root B while some services still use root A. During that period, services (brokers and clients) have to trust both roots.

When we decide to rotate the root, we have to introduce a new root called “root-b”:

{
  "primary_issuer": "kafka-pki/root-a",
  "secondary_issuers": ["kafka-pki/root-b"]
}

This leads to the following:

Certificate Root: root-a
Additional Trust Root: [root-b]

Once this change in value for the Consul key is propagated to all of the consul-template watchers, they will add the root certificate of root-b to the chain of trust for all services, but everything still uses certificates issued by root-a for mTLS communications.

We can now swap the roots:

{
  "primary_issuer": "kafka-pki/root-b",
  "secondary_issuers": ["kafka-pki/root-a"]
}

This leads to the following:

Certificate Root: root-b
Additional Trust Root: [root-a]

This again forces all consul-template instances to regenerate certificates, now using root-b for certificate generation while still keeping root-a in the chain of trust. This step gives services the opportunity to catch up to the new root. Once this change has been propagated, the old root, root-a, can be removed from the Consul key, as shown below:

{
  "primary_issuer": "kafka-pki/root-b",
  "secondary_issuers": []
}

This final change removes the old root from the chain of trust of all services and completes the rotation.
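The ordering of the four key states matters because, while a change propagates, some services are on the old state and others on the new one. The sketch below models that as a simple invariant: each side's issuing root must be among the other side's trusted roots. It assumes each step is fully propagated, with certificates regenerated, before the next one begins.

```python
from typing import List, NamedTuple, Tuple


class KeyState(NamedTuple):
    primary: str
    secondaries: Tuple[str, ...] = ()

    @property
    def trusted(self) -> set:
        return {self.primary, *self.secondaries}


def can_coexist(old: KeyState, new: KeyState) -> bool:
    """A service presents a certificate from its primary issuer and accepts peers whose
    issuer it trusts, so during propagation each side must trust the other's issuer."""
    return old.primary in new.trusted and new.primary in old.trusted


ROTATION: List[KeyState] = [
    KeyState("kafka-pki/root-a"),
    KeyState("kafka-pki/root-a", ("kafka-pki/root-b",)),  # 1. start trusting root-b
    KeyState("kafka-pki/root-b", ("kafka-pki/root-a",)),  # 2. issue from root-b, still trust root-a
    KeyState("kafka-pki/root-b"),                         # 3. drop root-a
]


def is_safe(steps: List[KeyState]) -> bool:
    return all(can_coexist(a, b) for a, b in zip(steps, steps[1:]))
```

Skipping either intermediate step breaks the invariant, which is why the rotation goes through both of them.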

Performance impact of TLS

TLS has a cost, but the network traffic overhead from using TLS is negligible. The main causes of the performance impact are the encryption and decryption of data and the loss of zero-copy optimization.

In preliminary tests that we ran in a test environment, we saw a 10%–15% increase in CPU usage from using mTLS. The test ran on Kubernetes while we were evaluating mTLS and is not an exact replica of a Zendesk pod setup, so it may not fully represent the impact we will see in production in the longer term.

Reasons behind the performance impact

The reasons behind the performance impact include:

- mTLS adds some network traffic overhead, although it is small

- Encryption and decryption are CPU-intensive operations

- Kafka can’t take advantage of zero-copy optimization

- The performance of the JVM SSL engine is not as good as that of something like OpenSSL, though it has become much faster in Java 9 and above

Zero-copy optimization

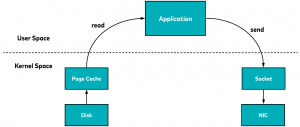

In a typical application running on Linux, sending the contents of a file over the network starts with a read system call. The kernel reads the file from disk into the page cache, if it’s not there already, and then copies it into the application’s memory in user space. The application then uses a send system call to copy the data into the socket buffer. Finally, the data is copied again into the network interface card (NIC) buffer to be sent over the network.

If the format of the file is wire ready, there’s a lot of unnecessary copying and context switching happening that wastes CPU cycles and memory bandwidth.

However, the app can instead use the sendfile system call to send the contents of the file from the page cache directly to the protocol engine to be sent over the network.

It’s even better on Linux kernel 2.4 or later with network interface cards that support the gather operation. As in the previous case, the data is read into the read buffer, but with the gather operation there is no copying between the read buffer and the socket buffer. Instead, the NIC is given a pointer to the read buffer, along with the offset and length, so the CPU is not involved in copying buffers at all.

This is especially helpful for garbage-collected languages: the data never enters the application’s memory space, so it never has to be garbage collected. If a Kafka broker running on Linux has a decent amount of free memory, the kernel transparently uses it as page cache with almost no penalty, so it can hold a lot of in-flight Kafka messages. Kafka can then send them to clients and other brokers quickly using the zero-copy sendfile system call. In summary, a decent amount of page cache coupled with zero-copy optimization gives Kafka a big performance boost.
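Python exposes the same system call as os.sendfile and wraps it in socket.sendfile(), which falls back to plain send() where sendfile is unavailable. This sketch contrasts the two paths on a local socket pair; it illustrates the system calls only, not Kafka's own I/O code.

```python
import socket


def send_with_copies(path: str, sock: socket.socket) -> int:
    """Classic path: read() into a user-space buffer, then send() it back into the kernel."""
    with open(path, "rb") as f:
        data = f.read()
    sock.sendall(data)
    return len(data)


def send_zero_copy(path: str, sock: socket.socket) -> int:
    """socket.sendfile() uses os.sendfile() where the platform supports it, so the bytes
    go from the page cache to the socket without passing through user space."""
    with open(path, "rb") as f:
        return sock.sendfile(f)
```

With TLS in the picture, the bytes must be encrypted in user space before sending, so only the first pattern remains available.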

Why do we lose zero-copy optimization with TLS?

We lose zero-copy optimization with TLS because of its precondition: what is on disk must be exactly what gets sent over the wire. Without TLS, this holds for Kafka, because Kafka uses a binary protocol and messages are stored and transported as the same byte streams.

Without TLS, we get zero-copy optimization out of the box because Kafka uses the sendfile system call. With TLS, however, everything has to be encrypted, which requires copying the file contents into application memory in user space, so zero-copy optimization is no longer possible.

This has a big performance impact on a system dealing with a lot of messages.

Kernel TLS to the rescue…maybe?

Kernel TLS is a Linux kernel feature that was introduced in version 4.13 that can mitigate the performance impact to some extent. In kernel TLS, the handshake, key exchange, certificate handling, alerts, and renegotiation still happen in the user space. Once the TLS connection is established, the application can set some options on the socket, including the negotiated symmetric encryption key. At that point, the application can use it as a normal socket and the kernel will take care of encryption transparently.

Fortunately, the application can use the sendfile system call again. This removes the unnecessary context switching/copying and improves TLS performance for any user space application. It’s uncertain when this kernel feature will be widely available and when JVM will take advantage of it, but it will be good to have.

Client onboarding

With a mutual TLS solution designed and implemented, how do we drive adoption among our clients? Even a good feature isn’t worth much unless it’s being used.

We have done a few things to support this effort:

- We have written an easy-to-follow onboarding guide

- We have built tools to spin up a local Kafka cluster configured with mTLS using only a few commands

- We also built example Kafka clients configured with mTLS in different programming languages that work out of the box with the local Kafka cluster

- We are supporting early adopters and working closely with them to get their clients configured with mTLS

- We have built a tighter integration with Kubernetes

apiVersion: apps/v1  # extensions/v1beta1 Deployments were removed in Kubernetes 1.16
kind: Deployment
metadata:
  name: kafka-consumer
  namespace: default
  labels:
    project: kafka-consumer
    team: event-streamer
  annotations:
    kafka-auth/enable: 'true'
    kafka-auth/cname: 'kafka-consumer.kafka.com'
# spec (selector, pod template, ...) omitted for brevity

In the manifest above, the kafka-auth/enable and kafka-auth/cname annotations enable Kafka authentication. This is a service that anyone in our organization can use to have their TLS certificates taken care of.

In Zendesk’s foundation org, our goal is to relieve product developers of work that gets in their way, so that they can concentrate on adding value for our customers while we do the heavy lifting. They shouldn’t have to be concerned with mTLS setup, security, and the compliance requirements around it; they only need to make the necessary code changes in their clients to use mTLS with Kafka. They follow our onboarding guides and add these annotations to their Kubernetes manifest. The annotations cause an admission webhook controller to inject our auth manager container into their deployment, which takes care of certificates for them. The auth manager can also run in daemon mode as a sidecar that watches for certificate expiration and regenerates certificates before they expire.
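To illustrate the injection step, here is a sketch of the mutation logic such a webhook might run on the Deployment shown above: if the annotations opt in, it returns JSONPatch operations that add a shared volume, an init container, and a sidecar. The image, container names, mount path, and command-line flags are all hypothetical.

```python
from typing import Dict, List

ENABLE, CNAME = "kafka-auth/enable", "kafka-auth/cname"
# Hypothetical values: the real image, flags and paths are not described in this post.
AUTH_IMAGE = "registry.example.com/pki-auth-manager:latest"
CERT_VOLUME, CERT_DIR = "kafka-certs", "/etc/kafka-certs"


def patch_for_deployment(deploy: Dict) -> List[Dict]:
    """JSONPatch operations a mutating webhook could return for an annotated Deployment."""
    annotations = deploy.get("metadata", {}).get("annotations") or {}
    if annotations.get(ENABLE) != "true":
        return []  # not opted in: leave the object untouched
    common_name = annotations.get(CNAME)
    if not common_name:
        raise ValueError(f"{CNAME} is required when {ENABLE} is 'true'")

    pod_spec = deploy.get("spec", {}).get("template", {}).get("spec", {})
    base = "/spec/template/spec"

    def append(field: str, item: Dict) -> Dict:
        # JSONPatch can only append with "/-" to an array that already exists.
        if pod_spec.get(field):
            return {"op": "add", "path": f"{base}/{field}/-", "value": item}
        return {"op": "add", "path": f"{base}/{field}", "value": [item]}

    def auth_manager(name: str, extra_args: List[str]) -> Dict:
        return {
            "name": name,
            "image": AUTH_IMAGE,
            "args": ["--common-name", common_name, *extra_args],  # hypothetical flags
            "volumeMounts": [{"name": CERT_VOLUME, "mountPath": CERT_DIR}],
        }

    return [
        append("volumes", {"name": CERT_VOLUME, "emptyDir": {}}),
        # Init container: certificates exist before the Kafka client starts.
        append("initContainers", auth_manager("pki-auth-init", ["--once"])),
        # Sidecar: regenerates certificates before they expire.
        append("containers", auth_manager("pki-auth-manager", [])),
    ]
```

A webhook would return these operations, base64-encoded, in the patch field of its AdmissionReview response with patchType JSONPatch. A fuller version would also mount the certificate volume into the application container.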

Because we take care of all the details of certificate generation, rotation, and root certificate rotation, product developers don’t need to worry about it. This also allows us to make changes to our mTLS setup behind the scenes in order to stay compliant and secure without product developers even noticing that anything has changed.

Lessons learned

Before coming to this solution, we held many brainstorming sessions and had to discard a few initial solutions, too. We have learned various lessons along the way, and here are some of the key ones that stand out.

The revocation check of public SSL certificates is widely broken

It seems that the revocation check of public SSL certificates is a very hard problem to solve. Why does it matter in our case, given that we are not using public SSL certificates? It matters because the revocation mechanism is supposed to be similar for private PKI infrastructures, too, and the lack of proper tooling and library support makes revocation hard to implement for private mTLS setups as well. Kafka doesn’t support a custom certificate revocation status check. There’s a JVM-level flag you can set to turn it on, but then the revocation status of all TLS certificates will be checked, including public HTTPS ones, which has its own problems.

There are two ways to check the revocation status of a certificate:

- Certificate revocation list (CRL)

- Online certificate status protocol (OCSP)

However, these are not consistently implemented by public CAs and clients. Web browsers are inconsistent too: what a browser does when a certificate is revoked varies widely.

Here’s an example of how this affects other unrelated TLS connections the service might make:

-Dcom.sun.security.enableCRLDP=true -Dcom.sun.net.ssl.checkRevocation=true

You can pass in these flags to enable the certificate revocation check with CRL on the JVM level. That’s what we did as a start.

This is our CRL URL (obfuscated):

https://somedomain.com/kafka-pki/crl

The certificate chain of *.somedomain.com is this, with a few intermediate CAs:

0 .. Sectigo Limited….
1 .. The USERTRUST Network/CN=USERTrust RSA
2 .. CN=AddTrust External CA Root
3 .. CN=AddTrust External CA Root

One of the above intermediate CAs doesn’t provide a CRL, so the download of this file fails, causing a failed TLS handshake.

It’s ironic that the revocation check of our CRL URL itself failed. CRL was the only feasible option in our case. We figured it was too risky to turn it on for the whole JVM, so we had to let it go. Instead, we worked hard to compensate for it with a robust root certificate rotation mechanism.
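For contrast, when revocation checking can be scoped to a single TLS context, the global side effect disappears. The sketch below uses Python's ssl module (not something the JVM or Kafka offers) to require a CRL check for the private-PKI context only, leaving other HTTPS connections in the same process untouched. The combined CA-and-CRL file is an assumption.

```python
import ssl
from typing import Optional


def private_pki_context(ca_and_crl_pem: Optional[str] = None) -> ssl.SSLContext:
    """TLS client context used only for connections inside the private PKI."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    # Revocation checking is a property of this context only; other HTTPS
    # clients in the same process keep their own default behaviour.
    ctx.verify_flags |= ssl.VERIFY_CRL_CHECK_LEAF
    if ca_and_crl_pem:
        # A PEM file holding the private root CA and its current CRL; without a
        # loaded CRL, verification under this flag fails.
        ctx.load_verify_locations(cafile=ca_and_crl_pem)
    return ctx
```

The trade-off is that the CRL must be refreshed regularly, for example from the CA's CRL endpoint, or verification starts failing once it goes stale.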

Automating CA rotation of a live system is hard

We spent a lot of time coming up with the rotation mechanism that we have now. (We didn’t think it would take this much time!)

What about it was hard?

- The operationalization of the rotation without compromising availability

- Finding the right tools

We expected to find more tooling and information about it on the public internet, but little was available. Going with a very long TTL for the root certificate is a lot easier.

Our hope is that the service mesh and transparent proxies will eventually make application-level mTLS more manageable.

Additional details

We were able to rate limit the consumers and producers of the global event bus using the common name (CN) of their certificates as their identity. This enabled us to introduce new traffic into our clusters safely without disrupting existing workloads. We built the mTLS infrastructure and tooling with reuse in mind: even though we built it for Kafka, it can be used to implement mTLS for any other system. Other teams inside Zendesk are already reusing it for mTLS in their microservices ecosystem, and we are working together to make it better.
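How the identity is extracted isn't described here. In stock Kafka, an mTLS client's principal is derived from its certificate's subject (and can be rewritten with ssl.principal.mapping.rules), and quotas can be keyed on that principal. A small sketch of pulling the CN out of such a distinguished name:

```python
def common_name(principal: str) -> str:
    """Return the CN attribute of a distinguished name like 'CN=app,OU=Kafka,O=Example'.

    Simplified parser: it does not handle escaped commas or multi-valued RDNs.
    """
    for rdn in principal.split(","):
        attr, sep, value = rdn.strip().partition("=")
        if sep and attr.upper() == "CN":
            return value
    raise ValueError(f"no CN in {principal!r}")
```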

Example CA rotation code

You can access a POC CA rotation project using the same strategy in this blog post at https://git.io/ca-rotation. Feel free to raise issues in the repo if you have any comments or questions.

Conclusion

At Zendesk, we were able to satisfy all the requirements that we were looking for in our Kafka solution, except for individual certificate revocation. In response, we built a robust, mostly automated CA root certificate rotation system that has worked as expected in both our staging and production environments.

If you’d like to learn more, check out my Kafka Summit session, where I share more details about securing Kafka at Zendesk.
